During my PhD I studied motion vision in the lab of Andrew Straw. I showed that a specific group of neurons makes the difference when tracking very fast-moving objects, and that motion processing is essential for finding objects that move very fast – at least in the insect brain. That is the topic of my published PhD work.

How fast are the fastest objects we can still see? And more importantly, which part of the brain determines whether we can still see them? These are some of the questions I answered during my PhD research. To do that, I used fruit flies. Why flies? Well, first of all, motion vision is very important for flies if they want to get anywhere while flying around. Secondly, we know enough about flies to have the basics out of the way and get to the interesting bits. Also, poking around in people’s heads is frowned upon.

It turns out that flies can track slow objects without needing a specialized motion vision pathway. But once the object moves fast enough, its position alone is not enough to keep track of it.

Motion vision is the ability to interpret changes in brightness and determine that either you or something around you is moving (a fast car for instance, or a friendly fellow human). We can study motion vision very easily in the fruit fly. It is small, and because it flies, it relies on motion vision both to orient itself and to track objects in its environment.

Very simple networks in the brain can already do motion vision, and in theory that is enough for object tracking too, especially for fast objects. In the lab, I tested this in real flies, and the theory holds up surprisingly well in practice, given how simply it is modelled. We could also measure very precisely how well the fly performed at each speed, and do the same for flies that had been genetically altered to block the firing of specific neurons. By comparing them to ‘wild type’ flies, we could see what contribution these neurons make.

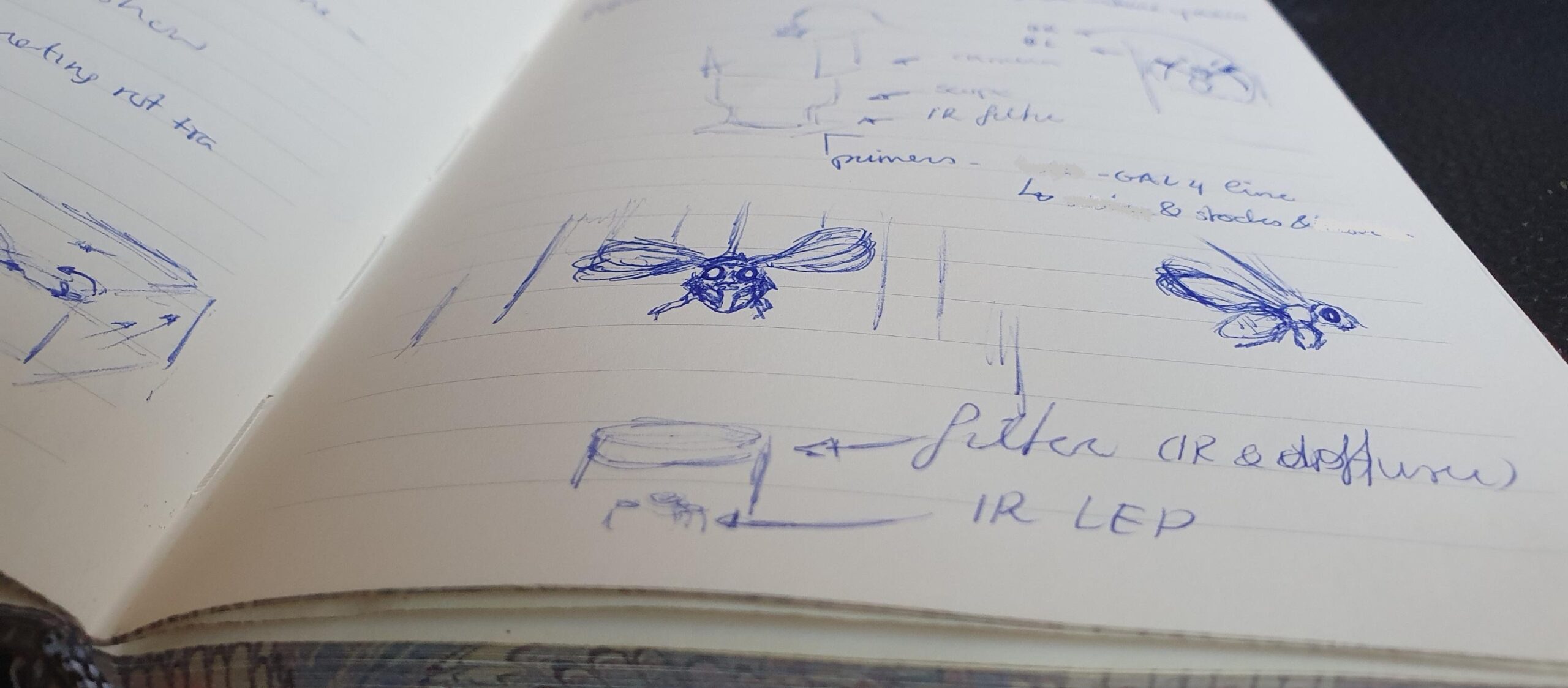

To do that, we fixed a fly in a tiny cylindrical virtual reality flight simulator, where the fly tracks a vertical stripe in a simplified world. We filmed the fly with a camera to measure its wing angles. These indicate the turns the fly is trying to make, and we used that information to turn the virtual world on the display accordingly, in real time. That way, we know the fly is tracking an object when the object stays in front of it in the virtual world. To keep it there, the fly must keep flying straight towards it.
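For the programmers among you, the closed loop described above can be sketched in a few lines of Python. This is only an illustration, not the code we ran in the lab: the function name, the `gain` parameter, the use of the left-minus-right wing angle difference as the turn signal, and the sign convention are all assumptions made for this example.

```python
def closed_loop_step(stripe_angle_deg, left_wing_deg, right_wing_deg, gain, dt):
    """Return the new stripe position after one display update.

    Hypothetical sketch: the difference between the two wing angles is
    taken as a proxy for the turn the fly attempts (an assumption).
    """
    # Larger left wing angle is read here as an attempted turn to the right.
    turn_signal = left_wing_deg - right_wing_deg
    # If the fly turns right, the world must rotate left on the display.
    new_angle = stripe_angle_deg - gain * turn_signal * dt
    # Wrap to [-180, 180) because the display is a cylinder.
    return (new_angle + 180.0) % 360.0 - 180.0


# A toy "fly" that steers towards the stripe brings it to the front (0 degrees).
angle = 60.0
for _ in range(200):
    # Made-up steering rule: the wing angle difference is proportional to the error.
    left, right = 45.0 + 0.1 * angle, 45.0 - 0.1 * angle
    angle = closed_loop_step(angle, left, right, gain=5.0, dt=0.01)
print(round(angle, 2))
```

With symmetric wing angles the stripe does not move, which corresponds to the fly flying straight ahead.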

To test how much the various neuron types contribute, just letting the fly track a stationary object is not enough – it’s a task that the fly can do rather easily. Since we wanted to find the limits of object tracking, we had the fly track a moving object in the virtual world. Within each experiment, this object moves back and forth faster and faster as time goes by (a logarithmic chirp oscillation). That means the fly now has to keep up with those oscillations to keep the object in front.
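If you are curious what such a chirp looks like, here is a minimal sketch. I am assuming a chirp whose oscillation frequency grows exponentially from `f_start` to `f_end` over the course of the experiment; the function names and all numbers below are placeholders for illustration, not the values from the experiments.

```python
import math


def instantaneous_frequency(t, duration, f_start, f_end):
    """Oscillation frequency (Hz) at time t, growing exponentially over time."""
    return f_start * (f_end / f_start) ** (t / duration)


def log_chirp_position(t, duration, f_start, f_end, amplitude_deg):
    """Angular position (degrees) of the object at time t (seconds)."""
    ratio = f_end / f_start
    # The phase is the integral of 2*pi*f(t) from 0 to t.
    phase = (2 * math.pi * f_start * duration / math.log(ratio)
             * (ratio ** (t / duration) - 1))
    return amplitude_deg * math.sin(phase)


# Placeholder values: 20 s sweep from 0.1 Hz to 10 Hz, +/- 30 degrees.
duration, f_start, f_end, amplitude = 20.0, 0.1, 10.0, 30.0
for t in (0.0, 5.0, 10.0, 15.0, 20.0):
    f = instantaneous_frequency(t, duration, f_start, f_end)
    x = log_chirp_position(t, duration, f_start, f_end, amplitude)
    print(f"t={t:5.1f} s  frequency={f:6.3f} Hz  position={x:7.2f} deg")
```

Because the frequency multiplies by the same factor in every time interval, a single experiment sweeps evenly across slow and fast oscillations on a logarithmic scale.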

You can read more in this publication: A unifying model to predict multiple object orientation behaviours in tethered flies.